Dissatisfied with media coverage of artificial intelligence, researcher Zachary C. Lipton launched the blog Approximately Correct to do justice to his subject. According to Lipton, media coverage of artificial intelligence relies on approximate data and dwells on speculative prophecies, to the detriment of the true nature of AI innovation.

In an article entitled “AI’s PR problem,” Jerry Kaplan, an entrepreneur, writer and futurist based in San Francisco, posits that artificial intelligence is the victim of fantastic exaggerations and misconceptions of all kinds. According to Kaplan, the media has embellished recent progress in the field, giving it a false image. As a result, technical advances as diverse as AlphaGo, self-driving cars and voice-activated virtual assistants such as Siri and Alexa are presented as steps in the progressive development of a superintelligence that is on its way to surpassing even the human mind.

“While it’s true that today’s machines can credibly perform many tasks (playing chess, driving cars) that were once reserved for humans, that doesn’t mean that the machines are growing more intelligent and ambitious. It just means they’re doing what we built them to do,” says Kaplan.

According to Kaplan, recent successes in artificial intelligence can be attributed to two highly distinct technological advances: the traditional approach and machine training (or machine learning, in the language of Alan Turing), but “neither of these approaches constitute the holy grail of artificial intelligence.”

Fantasies and Exaggerations

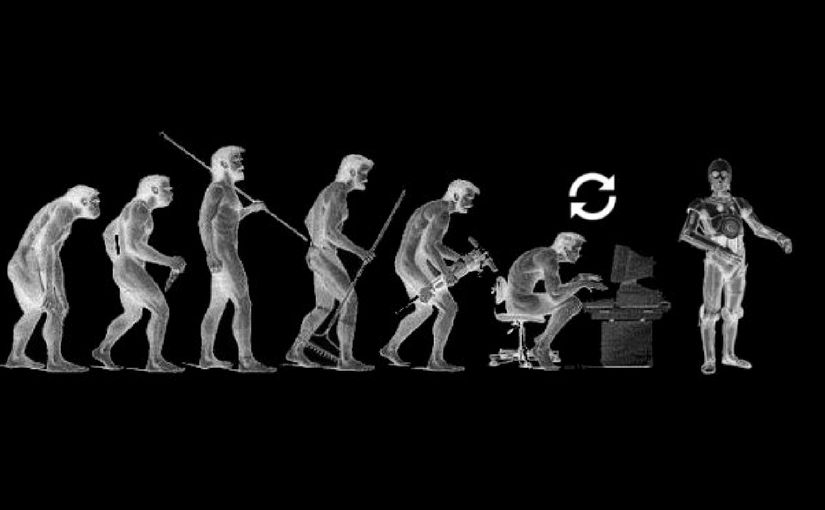

Kaplan is not alone. Zachary Chase Lipton, an artificial intelligence researcher who will join Carnegie Mellon University next fall as an assistant professor of machine learning, launched a blog entitled Approximately Correct to deal with the misconceptions surrounding AI. Frustrated with the incessant flow of errors and assumptions in the media regarding his field of study, the young researcher started the blog to discuss the topic in a way that is at once accurate and accessible to a large audience. According to Lipton, the articles he has seen on the subject are sensationalist and echo old fantasies from science fiction and pop culture in order to cater to readers, regardless of whether they reflect reality.

“Recently, OpenAI (a non-profit research organization dedicated to artificial intelligence and founded by Elon Musk and Sam Altman) published a research paper on an approach called Evolution Strategies. It’s a variation on neural networks that uses a different technique, vaguely inspired by the theory of evolution, to improve the algorithm over time. The approach is very interesting, but media coverage of the OpenAI paper would have readers believe that we are developing autonomous artificial agents and that they are evolving like living organisms liable to escape our control.”
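To make the idea less mysterious, here is a minimal sketch of an evolution-strategy loop. It is not OpenAI's code: the function name `evolution_strategy`, the toy `fitness` function, the `target` values and all parameter settings are assumptions chosen purely for illustration. The loop perturbs a list of numbers with random noise, scores each perturbed copy, and nudges the numbers toward the copies that scored better. Nothing in it is alive or autonomous.

```python
import random

def evolution_strategy(fitness, theta, iterations=300, population=50,
                       sigma=0.1, learning_rate=0.03, seed=0):
    """Hypothetical minimal ES loop (illustrative only, not OpenAI's implementation)."""
    rng = random.Random(seed)
    theta = list(theta)
    for _ in range(iterations):
        # Draw one Gaussian noise vector per population member.
        noises = [[rng.gauss(0.0, 1.0) for _ in theta] for _ in range(population)]
        # Score each perturbed copy of the parameters.
        scores = [fitness([t + sigma * e for t, e in zip(theta, eps)])
                  for eps in noises]
        # Standardize scores so the step size does not depend on their scale.
        mean = sum(scores) / population
        std = (sum((s - mean) ** 2 for s in scores) / population) ** 0.5 or 1.0
        # Move each parameter toward the noise directions that scored above average.
        for j in range(len(theta)):
            step = sum((s - mean) / std * eps[j]
                       for s, eps in zip(scores, noises)) / population
            theta[j] += learning_rate * step
    return theta

# Toy problem (an assumption): find the point closest to a fixed target.
target = [1.0, -2.0, 0.5]

def fitness(params):
    # Higher is better: negative squared distance to the target.
    return -sum((p - t) ** 2 for p, t in zip(params, target))

result = evolution_strategy(fitness, [0.0, 0.0, 0.0])
print([round(value, 2) for value in result])
```

In this toy setting the "evolution" amounts to a few hundred rounds of guess, score and adjust on three numbers, which is a long way from organisms escaping control.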

Lipton adds that many journalists put serious academic study on the back burner when discussing AI, focusing instead on grandiose claims without scientific value.

“As soon as an article revolves around artificial intelligence, writers rarely take the initiative to doubt the validity of such grandiose statements. A prophecy made by Ray Kurzweil is then placed on the same plane as the most recent work done by scientists.”

Ray Kurzweil, a key figure in the transhumanist movement, helped popularize the notion of “the singularity,” an idea taken from science-fiction author Vernor Vinge. This theory postulates that in the far future, humans will witness the advent of super-intelligent machines that surpass human ability, and that humans will then merge with those machines, inaugurating a post-human era run by machines. Kurzweil is also known for a number of predictions about the evolution of new technologies in his published works. Some of his predictions have come true (the dot-com boom, predicted in 1980), while others were a bit ambitious (autonomous vehicles hitting the mass market by 2009), and some proved to be entirely false (the disappearance of the paperback).

In his book The Myth of the Singularity, French researcher Jean-Gabriel Ganascia criticizes Kurzweil for a lack of rigor in his predictions of the singularity, which rest entirely on extrapolating Moore's law to the evolution of living things. According to Lipton, the theory of the singularity embodies the problem with the media’s portrayal of artificial intelligence. Fantastical and unrealistic, it echoes images from a collective imagination grounded in science fiction, and it often guarantees an article's popularity and its shares on social media, even though the singularity has nowhere near the scientific validity of the research actually being done on artificial intelligence. That research gets less coverage because it’s difficult to sell something that lacks a fantastical element.

Predictive algorithms, killer robots and automation

This brings us to the heart of the problem. For Lipton, the tendency to let a phantasmagorical vision of artificial intelligence drive every AI-related narrative does the public a disservice, because it hides how the technology is actually developing. Worrying about an artificial intelligence taking over humanity also leads people to underestimate, or even ignore entirely, the risks that actually exist.

For example, the media rarely discusses the fact that algorithms now make decisions, a development that carries the potential for discrimination. The issue is omnipresent today. These algorithms choose what appears in a Facebook feed and pass recruiters the CVs of candidates with the right qualifications for the job on offer. They even suggest to American police officers whether a suspect is potentially too dangerous to be released while awaiting trial.

Lipton posits that it’s imperative for these algorithms to act in an optimal and unbiased manner. The problem is that, most of the time, we aren’t sure that is the case.

“First, the expert in charge of designing the algorithm must be unbiased. If the data is labeled in a way that reflects a bias (e.g., a racist judgment), the model, which is meant to imitate the expert, will also be biased. The algorithm’s objective can also be wrong: for example, an algorithm charged with finding the best articles published the night before could end up picking the most-read articles, to the detriment of those of potentially higher quality. In fact, machine learning systems tend to be more discriminatory towards minorities, because minorities are not as well represented in the data used to train the algorithm. As a result, predictions concerning members of those communities are less precise than those addressing most of the population. That can have negative consequences if the point of the algorithm is to link a person to employment opportunities or verify his or her financial solvency.”
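The under-representation effect Lipton describes can be reproduced with a toy example. The sketch below is an illustration under stated assumptions, not a model of any real system: the data is synthetic, the group names, sample sizes and cutoffs are invented, and the "model" is a single threshold chosen to minimize errors over the pooled training data. Because the hypothetical minority group makes up only 5% of that data and follows a slightly different pattern, the fitted threshold suits the majority and misjudges the minority more often.

```python
import random

def make_group(rng, n, cutoff, name):
    """Synthetic people with one feature x in [0, 1]; the true label is x > cutoff."""
    return [(x, x > cutoff, name) for x in (rng.random() for _ in range(n))]

def fit_threshold(samples):
    """Pick the single threshold that minimizes errors over ALL samples pooled."""
    candidates = [i / 100 for i in range(101)]
    return min(candidates,
               key=lambda t: sum((x > t) != y for x, y, _ in samples))

def accuracy(samples, threshold, name):
    """Share of correct predictions for one named group."""
    group = [(x, y) for x, y, g in samples if g == name]
    return sum((x > threshold) == y for x, y in group) / len(group)

rng = random.Random(0)

# Assumed setup: 950 majority samples versus 50 minority samples,
# with a different true cutoff for each group.
train = (make_group(rng, 950, 0.5, "majority")
         + make_group(rng, 50, 0.3, "minority"))

# Held-out data with equal group sizes, so both accuracies are measured fairly.
test = (make_group(rng, 5000, 0.5, "majority")
        + make_group(rng, 5000, 0.3, "minority"))

threshold = fit_threshold(train)
majority_accuracy = accuracy(test, threshold, "majority")
minority_accuracy = accuracy(test, threshold, "minority")

print(f"fitted threshold:  {threshold:.2f}")
print(f"majority accuracy: {majority_accuracy:.3f}")
print(f"minority accuracy: {minority_accuracy:.3f}")
```

No one programmed the threshold to disadvantage anyone. The gap comes entirely from which group dominates the training data, which is why this kind of bias is easy to overlook.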

In her book Weapons of Math Destruction, data scientist Cathy O’Neil points out the risks posed by predictive algorithms, citing their tendency to reinforce discrimination and inequality.

Another potential danger of artificial intelligence according to Lipton: robotic weapons, which are already being used.

“Drones already claim victims. Today they are controlled by humans, but part of the process could easily be automated. Keeping a drone stable in flight, for example, doesn’t require human intervention. One can thus imagine drones patrolling over a region, with face-recognition technology allowing them to identify and eliminate their targets. This technology is already available today, with new research in progress. It isn’t difficult to imagine where this could lead: an automated arms race with drones subject to the same biases as algorithms, and ethical questions that will arise when robots relying on statistical calculations get to decide who lives and who doesn’t.”

Furthermore, Lipton fears the potential impact that these developments might have on employment.

“It would be gradual. In the near future, it is possible that robots and technological systems will replace humans in the industrial, transportation and communication sectors. In the long term, the medical sector (primarily radiology) will also be affected by automation.”

On this final point, futurist Jerry Kaplan says that the problem isn’t, as we often hear, the potential number of jobs destroyed but rather the rapid pace at which they will disappear.

“Over half of the jobs that existed in the United States 100 years ago have disappeared. Just as with climate change, the problem isn’t the phenomenon itself but rather its speed, and the speed at which we will be able to adapt to it.”

James Bessen, author of Learning by Doing: The Real Connection between Innovation, Wages, and Wealth, sees a need to adapt education to this new reality by making people versatile and able to acquire new skills quickly, rather than focusing on a single domain of expertise. According to Bessen, lifelong learning and professional development through training and online courses would also be necessary to adapt to this brave new technological world.

For his part, Lipton has thrown himself into writing a series of articles dedicated to deconstructing the misconceptions surrounding AI. One of his first pieces, published April 1st, is a satire on the principal tenets of the singularity, and it’s worth a read.
